The WiiMersioN project is the first in a set of three projects (all carrying the “WiiMersioN” name) I created using Nintendo Wii controllers as a type of “augmented” reality through real life motions corresponding to the same motions onscreen. With these projects I was basically challenging myself to see how closely I could mimic real life through video games. To think of it in extreme terms, I was trying to accomplish full virtual/augmented reality by using the tools at my disposal.

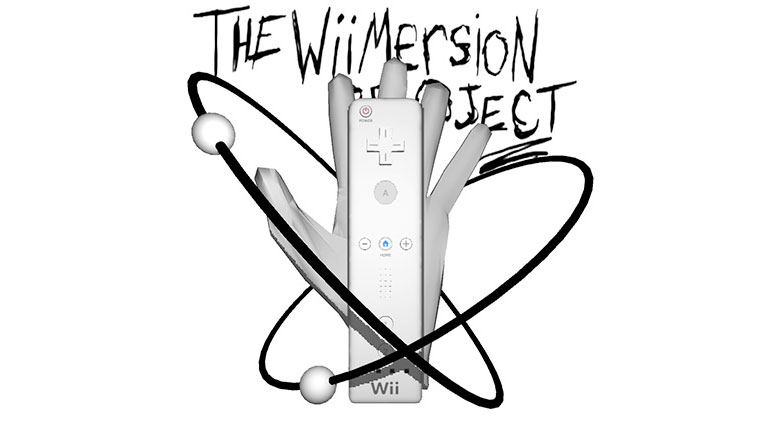

The WiiMersioN Project begins by displaying the 3D-rendered logo along with onscreen instructions prompting players to connect a Wii remote to the computer via Bluetooth. The game alerts the player when the Wii remote successfully connects, then prompts him or her to connect a second Wii remote. After both Wii remotes are synced, the player can press the + button to begin the game.

The game is an interactive crystal image. The player holds one Wii remote in each hand in real life in order to control the virtual hands onscreen. There are also two virtual Wii remotes on a table that the player can pick up (while using the real Wii remotes to instruct the virtual arms to do so). Lastly, there is a TV screen past the table. The TV displays exactly what the player is looking at: the game! Of course inside that game is another instance of the game, and so on and so forth.

The only objects I didn’t model are the two Wii remote models. As far as coding, I found a plugin for Unity that allowed connection with Wii remotes, but I modified it, added to it, and used it to fit my needs.

ORIGINAL DOCUMENTATION

Original Idea

(In an e-mail to professor)

Hi, Jack, this is Adam Grayson. I’d just like to run this project idea by you before class Tuesday to see what you think.

So what I’ve been thinking is that I can make a game that implements two Wii remotes. The player would hold one in each hand. These Wiimotes would correspond to two arms/hands that are on-screen. The movement of the virtual hands would respond to the movements of the player’s hands via the two Wiimotes. So, for example, if the player moves his/her left hand forward, the left virtual hand would move forward. In order to grab objects in-game, I was thinking that the player could press/hold the B button on the Wiimote. This button is on the bottom of the controller as a sort of trigger. I think this is a good simulation of one grasping something. So when the B button is pressed, the virtual hand makes a grasping gesture; if some object is in front of the hand when the button is pressed, the object will be picked up. In order to release the object, the player would release the B button.

The movements of the virtual hands would respond to the three axes of direction along which the player can move the Wiimotes, as well as to rotational movement.

I’m not sure if I will be able to implement this into the game, but later this year, Nintendo is coming out with the Wii Motion Plus. This is a device that plugs into the bottom of the Wiimote and more accurately interprets movement made by the player. As it stands now, the Wiimote does not offer true 1:1 movement; with the Wii Motion Plus, however, 1:1 will be possible. Obviously this could help me in interpreting movements by the player, but I’m not sure if I’ll be able to implement this as there is no release date for the device other than sometime this year.

What I’m not sure about as of now is how this technology would be used in the game. Perhaps it could just be a sandbox game where you are put in a certain open environment and there are objects around that you can interact with. Maybe I could simulate drawing with this, so that you could pick up a piece of paper and a pencil and “draw” (you would see the virtual hand drawing rather than just lines appearing on the paper). I could base this around some sort of puzzle that must be solved.

I think what I really like about this idea is the immersion aspect of it. With the use of the controls and the fact that the player can see “their own” hands in the game, I think this brings the player into the game more than usual. I don’t know if this will come up, but I’d also like to implement a system that allows the player to see themselves if they look into a reflective surface. Usually in games, if the player looks into a reflective surface, such as a mirror, the modeled character is seen in the reflection. Instead of doing this, I’d like to use some sort of camera that looks at the player and feeds the video into the game in real-time. So, say someone were to play this on my laptop (it has a built in iSight). The iSight would be recording video that would be fed into the game. If the player looked into a mirror, they would not see a rendered model, but rather see themselves in real life via the iSight. Perhaps I could also implement some sort of chroma keyer in order to get rid of the background environment (real life) that the player is in and put in the background of the virtual environment.

Concept

The concept behind this project is to recreate human movement in a game scenario, focusing on the movement of the arms, hands, and fingers of an individual. In order to do this, I am planning on using two Wii remotes as game controllers. One remote will be held in each hand. The remotes will correspond to the movements of the in-game arms and hands through the use of rotation, motion-sensing, and IR sensing.

This will be a 3D-modeled game in a semi-confined environment (in a room; size has yet to be decided). As of now, the style of the visuals is planned to be realistic, however it is not set in stone and may change throughout the project development.

In addition to moving the character’s upper limbs, the player will be able to move the character around this virtual environment.

Controls

As of now, there are two planned stages of movement: Free Movement and Held Movement. Free Movement would be the normal movement of the in-game limbs as the player moves the character around the space. In this mode, the player will be able to move the arms of the character (again through rotation, motion-sensing, and IR sensing). Held Movement will be the item-specific movement of the limbs after the player has picked up an in-game object. For example, if the player were to pick up a virtual NES controller, the movements and button presses of the remotes will more specifically correspond to the fingers rather than the previous control over the entirety of the arms.

Transition from Free Movement to Held Movement

The player begins in Free Movement. In order to enter into Held Movement, the player will press the A and B buttons on the Wii remote. Each hand is controlled independently, so one hand can be in Free Movement while the other is in Held Movement.
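The per-hand transition can be pictured as a small state machine. The following is only a sketch in Python, not the Unity scripting the project itself used; the `Hand` and `Mode` names are hypothetical, and it assumes the A+B combination toggles the mode once per press rather than on every frame the buttons are held.

```python
from enum import Enum


class Mode(Enum):
    FREE = "free"
    HELD = "held"


class Hand:
    """Tracks the movement mode of one virtual hand (hypothetical helper)."""

    def __init__(self, name):
        self.name = name
        self.mode = Mode.FREE
        self._combo_was_down = False  # remembers last frame's A+B state

    def update(self, a_down, b_down):
        """Call once per frame with the current A and B button states."""
        combo_down = a_down and b_down
        # Toggle only on the frame where A+B first becomes pressed,
        # so holding the buttons does not flip the mode every frame.
        if combo_down and not self._combo_was_down:
            self.mode = Mode.HELD if self.mode is Mode.FREE else Mode.FREE
        self._combo_was_down = combo_down
        return self.mode


left, right = Hand("left"), Hand("right")
left.update(a_down=True, b_down=True)    # left hand enters Held Movement
right.update(a_down=True, b_down=False)  # right hand stays in Free Movement
```

Because each `Hand` keeps its own state, one hand can sit in Held Movement while the other remains in Free Movement.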

Held Movement Specific Controls

Held Movement, while being primarily the same, will differ slightly depending on what object the player is holding in-game. Following the example given above, if the player picks up a virtual NES controller, Held Movement will be entered. An NES controller is primarily controlled with the thumb of each hand. Because of this, either the rotation or IR of the Wii remotes will be used to move the corresponding thumb (it will be decided later which control scheme works better). Moving the Wii remotes in this fashion will move the thumbs in a two-dimensional plane hovering over the NES buttons. In order to make the thumb press down, the player will press the A button on the Wii remote. Depending on whether the thumb is currently over a button, the thumb could either press down the button or press down on the controller, missing the button. In order to release the NES controller (exiting Held Movement) and return to Free Movement, the player will press the A and B buttons on the Wii remote simultaneously. Again, this can be done separately with each hand if so desired.
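The "press or miss" behaviour of the thumb amounts to a hit test on a two-dimensional plane. A rough Python sketch follows; the button layout, the units, and the `press_thumb` helper are all invented for illustration and are not taken from the project.

```python
from dataclasses import dataclass


@dataclass
class Button:
    name: str
    x: float
    y: float
    radius: float


# Hypothetical layout in arbitrary controller-plane units.
NES_BUTTONS = [
    Button("A", 8.0, 1.0, 0.6),
    Button("B", 6.5, 1.0, 0.6),
]


def press_thumb(thumb_x, thumb_y, buttons=NES_BUTTONS):
    """Return the name of the button under the thumb, or None for a miss."""
    for button in buttons:
        dx, dy = thumb_x - button.x, thumb_y - button.y
        if dx * dx + dy * dy <= button.radius ** 2:
            return button.name
    return None
```

A `None` result corresponds to the thumb pressing down on the controller body and missing every button.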

Character Movement

This area is still under development. I realized that the player is already using both hands to control the virtual arms. In addition to this, every function of the Wii remote is being used for this as well, so there are no functions available to control character movement around the virtual space. I have thought about implementing the Wii Balance Board to solve this problem. The Balance Board is able to sense the weight distribution of a person standing on it. So, for example, if the person is leaning to the left, the Balance Board detects this. Through this detection of body movement, the in-game character could be controlled. However, as is tradition now, there are two ways to move a character: directional movement and rotational movement (these are controlled with the dual-joystick setup, or, in the case of computer games, the mouse and the directional/WASD keys). The problem is that the Balance Board could only be used for one of these controls, not both. So this method could only control the way the character turned OR the way the character walked.
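To illustrate how weight distribution could become a movement input, here is a small Python sketch. It assumes the board exposes one load reading per corner; the function name, the dead zone, and the sign conventions are hypothetical choices, not part of any real Balance Board API.

```python
def lean_from_sensors(top_left, top_right, bottom_left, bottom_right,
                      dead_zone=0.1):
    """Convert four load readings (any consistent unit) into a lean vector.

    Returns (x, y) in the range -1..1, where +x is a lean to the right
    and +y is a lean forward. Leans smaller than dead_zone are ignored.
    """
    total = top_left + top_right + bottom_left + bottom_right
    if total <= 0:
        return (0.0, 0.0)  # nobody is standing on the board
    x = ((top_right + bottom_right) - (top_left + bottom_left)) / total
    y = ((top_left + top_right) - (bottom_left + bottom_right)) / total
    x = 0.0 if abs(x) < dead_zone else x
    y = 0.0 if abs(y) < dead_zone else y
    return (x, y)
```

The resulting vector could drive either walking or turning, which is exactly the limitation described above: a single lean vector only covers one of the two control types.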

There is a head-tracking technology available for the Wii and its remotes. This technique uses IR sensing. With this, it is feasible to control the rotational movement of the character in addition to the Balance Board which would control the directional movement. This would be an ideal situation, but I am not sure if it is realistic for this particular project.

Again, these are ideas, and this section is still under development.

Additional Ideas

An idea that may or may not be implemented into the game is the use of a webcam which feeds video directly into the game. If the player moves the character in such a way that a reflective object is seen on-screen, the object will not reflect the in-game character’s 3D model, but will instead “reflect” the video being received from the computer’s webcam. This webcam will be facing the player while he/she is playing. Thusly, the video will be of the player in real life; this will be what is reflected in the reflective object. This is to suggest that the player him/herself is the character in this virtual environment rather than a fictitious 3D character.

Progress Report and Extraneous Notes

3/17/09

- began working on 3d hands in maya

3/26/09

- rotation works (simple C# console output)

- X and Y AccelRAW data states make sense

- seem to vary from 100-160

- rest state is 130

- not sure what Z does yet… (don’t think i’ll need it)

- rotation will be used for rotation of the wrist and arm
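The raw accelerometer numbers noted above (roughly 100 to 160, resting at 130) can be normalized before being used for rotation. The Python sketch below is one possible mapping, not the code used in the project; the function names are hypothetical, and the tilt estimate assumes the remote is held still so that only gravity is being measured.

```python
import math

REST = 130       # raw value at rest, from the notes above
HALF_RANGE = 30  # raw values were seen from about 100 to 160


def raw_to_unit(raw):
    """Map a raw accelerometer value to roughly -1..1, clamped."""
    unit = (raw - REST) / HALF_RANGE
    return max(-1.0, min(1.0, unit))


def raw_to_tilt_degrees(raw):
    """Estimate tilt from gravity alone (assumes the remote is held still)."""
    return math.degrees(math.asin(raw_to_unit(raw)))
```

Clamping keeps a sudden jerk of the remote from producing a value outside the domain of `asin`.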

3/31/09

- built simple arm and hand for use in testing uniWii and the results i found last week

- applied wiimote script packaged with uniWii to arm

- able to control arm through tilt/accelerometer in wiimote

- pitch and roll/X-axis and Z-axis rotation

- made a script that would allow suspension of arm movement in return for hand-only movement when the A button was pressed

- hand moved around same pivot point as the arm, so it looked odd

4/2/09

- looked into using two wiimotes

- couldn’t really find anything

- tried to connect two just to see what would happen

- both connected perfectly, no extra work involved

- applied working method to two arms/hands

- operated with two wiimotes

4/7/09

- began to look into operation via IR position rather than accelerometers

- began reading through the uniWii/wiimote code to understand what was going on

- realized where the rotations were being made in the code, but wasn’t certain as to the specifics

- did some research on the methods/variables used around the piece of code

- got the gist of how the code worked

- tried to implement IR into the rotation code

- no luck

- code was getting a little complicated so i started a new scene with just a cube and worked on trying to move the cube with IR

4/14/09

- according to the code output, i got the IR to work, but i was seeing no visible sign of it working

- after much research and trial and error, i realized that the measurements being returned were between -1 and +1

- thusly, i WAS rotating, just by very small amounts

- created a separate pivot point for the hand

- hand now rotated in a more realistic fashion

4/16/09

- worked on getting the arms to not rotate so many times when the cursor went on/off screen

- realized that, while i was multiplying the IR by 100 (because the RAW measurements were between -1 and +1), the RAW measurement for being off screen was -100

- so rotation while the cursor was on screen was working fine, but as soon as the cursor went off screen, i was rotating the arms 10,000 degrees

- worked on making use of a variable to stop this over-rotation

- worked on making use of a variable that would return the rotation to 0 if the cursor went off screen
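The over-rotation described above comes from scaling the off-screen sentinel along with the valid readings. A minimal Python sketch of a guard is shown here; it is not the project's actual script, and the function name and the `reset_when_lost` option are hypothetical.

```python
OFF_SCREEN = -100.0  # sentinel reported when the cursor leaves the screen
SCALE = 100.0        # multiplier used in the notes above


def ir_to_rotation(ir_value, last_rotation=0.0, reset_when_lost=True):
    """Convert a raw IR reading (-1..1) to degrees, guarding the sentinel."""
    if ir_value == OFF_SCREEN or not -1.0 <= ir_value <= 1.0:
        # Without this check, the sentinel times the scale factor
        # would produce a 10,000-degree rotation.
        return 0.0 if reset_when_lost else last_rotation
    return ir_value * SCALE
```

Returning 0 matches the approach in the notes; holding the last valid rotation instead is an alternative that avoids a visible snap when the cursor leaves the screen.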

4/21/09

- managed to get unity pro trial via email with unity staff

- got it working on my laptop

- began working on project on laptop

- had a lot of trouble getting wiimotes to connect to laptop

- when they did connect, most of the time, the IR functionality did not work

- went through a lot of trouble shooting to try to get this working

- nothing ended up working

- realized i would not be able to use my laptop for unity work

4/23/09

- worked on different models in maya for possible use with hands in unity (piano keyboard, arcade button and joystick, tv remote, tv/tv cabinet, etc.)

- continued working on 3d hand model

4/24/09

- only able to pick up left wiimote with either hand

- began working on picking up either wiimote with left hand

4/25/09

- lab was closed

- worked on models some in maya

- figured i could do recursion with duplicates of what i already had

- make a hole in the tv where the screen would be, and just place the duplicates behind it

- warp these duplicates according to perspective to make them look correct

- ended up being REALLY huge and REALLY far out despite them looking small

- planned on testing this next time i’m in the lab

4/26/09

- able to pick up either wiimote with either hand

- however, very often both wiimotes would occupy one hand

- as soon as a wiimote touched a rigid body (and B was held on a wiimote), it would automatically go to the hand holding/pressing B

- worked on solving this problem all day

- resulted in nothing but me being confused
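The constraint being chased here (one wiimote per hand, one hand per wiimote) can be stated as an ownership rule. The Python sketch below only illustrates that rule; it is not the fix that was eventually used, and the `Grabbable` and `GrabHand` classes are hypothetical.

```python
class Grabbable:
    def __init__(self, name):
        self.name = name
        self.holder = None  # the hand currently holding this object, if any


class GrabHand:
    def __init__(self, name):
        self.name = name
        self.held = None  # the object this hand is holding, if any

    def try_grab(self, obj, b_down, touching):
        """Pick up obj only if B is held, the hand touches it,
        the hand is empty, and nobody else is holding the object."""
        if b_down and touching and self.held is None and obj.holder is None:
            self.held, obj.holder = obj, self
            return True
        return False

    def release(self):
        """Let go of whatever this hand is holding."""
        if self.held is not None:
            self.held.holder = None
            self.held = None
```

Checking both `self.held` and `obj.holder` is what prevents a second wiimote from jumping into a hand that is already full.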

4/27/09

- continued working on only being able to hold one wiimote in one hand

- tried many different scripts, mixing variables, commenting out code, etc.

- tried using global variables from a static class

- kind of worked, but when wiimotes were held in both hands, one would not stay kinematic; gravity would instantly affect it, which resulted in not being able to hold it

- some more tinkering

- eventually got it to work by using very specific else if statements

- continued working on recursion

- planned to duplicate what’s seen in front of the camera about five times (initial estimate) and move it off screen

- (different from what i had already done)

- each of the duplicates would have a camera that would output to a texture which the previous duplicate’s tv would display

- tv1 would display output from camera2, tv2 would display output from camera3, etc

- continue until recursions could no longer be seen/distinguished

- after much time put into this process, i realized that, because i was duplicating these objects, i couldnt have the cameras outputting to different textures, nor could the tvs display different things

- each camera would output to the same texture, and each tv would display the same thing

- effectively only using two of the duplicates going back and forth rather than using all of them as i had expected

- tried to make “different” scenes in maya

- same scenes, just different named files so i wouldnt be duplicating

- this worked fine, but the game took a massive dive in frame rate

- about 2-3 fps

- because of this, decided against using multiple “scenes,” cameras, textures, etc.

- went back to using two cameras with one “scene”
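The "about five" estimate for how many nested TVs are worth rendering can be sanity-checked with a little arithmetic. The Python sketch below assumes each nested image shrinks by the same fraction of the view's width; the function name and the example numbers are hypothetical.

```python
def visible_recursion_levels(screen_px, tv_fraction, min_px=2.0):
    """Count how many nested TV images stay at least min_px wide.

    screen_px: width of the real display in pixels.
    tv_fraction: fraction of the view's width taken up by the TV (0..1).
    """
    if not 0.0 < tv_fraction < 1.0:
        raise ValueError("tv_fraction must be between 0 and 1")
    levels = 0
    width = screen_px * tv_fraction  # width of the first nested image
    while width >= min_px:
        levels += 1
        width *= tv_fraction
    return levels
```

For example, `visible_recursion_levels(1280, 0.25)` returns 4: the nested images are 320, 80, 20, and 5 pixels wide before dropping below two pixels.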

4/28/09

- began working on main screen

- wanted to use the logo i made along with the hand that i had modeled

- rigged the hand in maya and positioned it to match the drawing

- used the wiimote .obj file i had and positioned it to match the drawing

- created rings and balls and positioned them to match the drawing

- created motion paths for balls to move around the rings

- played around with different materials for the objects in the scene

- decided on black and white lamberts with toon outlines on the balls

- when transferred from my laptop to the classroom computer, the rigged hand mesh resulted in some very messed up geometry

- went back to my laptop and deleted history on hand mesh

- this resulted in a loss of animation of the hand (because i had animated the skeleton)

- fortunately, the mesh kept the pose in which it was rigged, so i simply animated it as the skeleton was animated

- unity did not like the motion paths or the outlines on the balls

- went back into maya to remove the toon effects and hand key every frame of the balls’ paths

- after this, unity did not have a problem with this scene

- import and animation in unity worked fine

- looped animation

- during the main screen, i wanted the wiimotes to be used as mice inputs in order to throw around some ragdolls or rigid bodies before the actual game

- kind of like a preloader

- looked at dragRigidBody script to see if i could simply replace the mouseDown event with a button press event on the wiimotes

- after some research and a few experiments, i realized that it was too much work, and i would have to basically redo everything i had done for the main game in order for this to work

- decided against ragdoll/rigidbody preloading actions

- continued to finish steps for main screen

- main screen’s purpose was to sync wiimotes before game started as well as have cool opening design

- went into photoshop to create text prompts for users to connect and sync wiimotes to game

- placed these prompts onto planes in maya

- originally tried to animate transparency of planes in maya so that they would have a slight flashing animation

- unity did not translate the transparency animation

- then tried to place text around cylinders so that the text would orbit around the hand like the rings

- animated the half-cylinders to rotate

- unity did not like this animation either

- instead of animating where the objects were placed in unity, the objects were moved to different positions while animating (only while animating)

- this position could not be moved

- decided to just use static planes for text prompts

- began to write script for execution of prompts

- as user synced wiimotes/pressed buttons, prompts would (dis)appear from the camera view

- last prompt/button press loads the game scene

- worked on some final tweaks (removed displayed info from game scene, fixed main screen cursors to say “left” and “right,” took a last look over code)

- added two audio files to project

- one for main screen, and one for game scene

- added script in game scene that returns to main screen if either wiimote disconnects

- built game for final testing

- game somehow (and thankfully) managed to work on my laptop despite it refusing to do so as of late

- built game as OSX universal, windows .exe, and web player

- used game logo as application icon for the three versions

- DONE!
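The main-screen prompt flow and the disconnect check noted in the log can be summarized as two small decision functions. This Python sketch is only an outline of that logic, not the Unity script itself; the prompt wording and the function names are hypothetical.

```python
# Hypothetical prompt text; the real prompts were images made in Photoshop.
PROMPTS = [
    "Connect the left Wii remote",
    "Connect the right Wii remote",
    "Press + to begin",
]


def main_screen_step(connected_count, plus_pressed):
    """Return ("prompt", text) or ("load", "game") for the current inputs."""
    if connected_count < 2:
        return ("prompt", PROMPTS[connected_count])
    if plus_pressed:
        return ("load", "game")
    return ("prompt", PROMPTS[2])


def game_scene_step(connected_count):
    """Return to the main screen if either remote has disconnected."""
    return "main" if connected_count < 2 else "game"
```

Pressing + before both remotes are synced has no effect, because the connection count is checked first.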

Links To Game

Mac OSX .app | Windows .exe | Web player

- Note: The web player may not work. I tried it earlier, and I think the problem is the Bluetooth connection. If I can get it to work, I’ll definitely update it.

- Note – The Windows version may have missing prompts in the Main Menu (sometimes they appear, sometimes they don’t; seems dependent on the computer…)

- If you have any trouble with the game, please refer to the instructions